University of Washington linguistics professor Emily Bender gave a lecture titled “ChatGP-why: When, if Ever, is Synthetic Text Safe, Appropriate and Desirable?” on Sept. 14. The University of Wisconsin Language Institute invited Bender to speak about the history of large language models (LLMs) like ChatGPT, the divide between form and meaning, the threats LLMs pose and how to use them responsibly.

The first language models were “corpus models,” Bender said. These models predicted, based on a sample of text or speech, how likely a given phrase was to appear. They were used for relatively basic tasks like ranking spelling correction candidates and simplifying text entry on nine-key phones.
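
A corpus-based model of this kind can be sketched in a few lines of Python. The example below is a minimal illustration, not a system described in the talk: the toy corpus, the function names and the choice of a simple two-word (bigram) count are all assumptions made for demonstration.

```python
from collections import Counter

# Toy corpus, invented for illustration only.
corpus = "i will see you there . i will see them soon . we will be there soon".split()

# Count how often each word starts a pair, and how often each pair occurs.
context_counts = Counter(corpus[:-1])
bigram_counts = Counter(zip(corpus, corpus[1:]))


def bigram_probability(previous_word, word):
    """Estimate P(word | previous_word) from raw counts, with no smoothing."""
    if context_counts[previous_word] == 0:
        return 0.0
    return bigram_counts[(previous_word, word)] / context_counts[previous_word]


def rank_candidates(previous_word, candidates):
    """Order candidate corrections from most to least likely after previous_word."""
    return sorted(
        candidates,
        key=lambda w: bigram_probability(previous_word, w),
        reverse=True,
    )


# Rank three hypothetical spelling-correction candidates following "will".
ranked = rank_candidates("will", ["be", "see", "sea"])
print(ranked)
```

The ranking depends only on how often word pairs appear in the sample text, which is why such models suit tasks like choosing among spelling corrections.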

The next natural step was the creation of “neural nets,” Bender said. Unlike corpus models, neural nets can use “word embeddings,” which allow them to tell whether two words have similar meanings. As a result, neural nets are good at tasks like checking grammar and spelling, expanding search results and translating text.
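
One common way to compare word embeddings is cosine similarity, which measures how closely two vectors point in the same direction. The sketch below is illustrative only: the three-dimensional vectors are hand-picked, hypothetical values rather than output from any real model, and the function name is an assumption.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration.
# Real embeddings are learned from text and have many more dimensions.
embeddings = {
    "happy": [0.9, 0.1, 0.0],
    "glad": [0.8, 0.2, 0.1],
    "table": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


similar = cosine_similarity(embeddings["happy"], embeddings["glad"])
different = cosine_similarity(embeddings["happy"], embeddings["table"])
print(f"happy vs. glad:  {similar:.2f}")
print(f"happy vs. table: {different:.2f}")
```

With these toy values, "happy" and "glad" score close to 1 while "happy" and "table" score close to 0, which is the kind of signal a system could use to treat two words as related.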

Large language models, which draw on vast collections of text to formulate responses that imitate thoughtful language, were developed most recently.

“This stuff is not artificial intelligence, under any [common] definition of that term, and it’s definitely not artificial general intelligence, but it looks like it,” Bender said.

The difference between a true AI or AGI and an LLM like ChatGPT lies in the divide between form and meaning, and in LLMs’ lack of general understanding. According to Bender, form in linguistics refers to the observable parts of language, from marks on paper to lights on screens, whereas meaning links form to something external, like reasoning, emotion or perception.

LLMs are good at form, but not so much at meaning. They quite literally don’t understand what they are talking about, which worsens the issues highlighted in Bender’s research on LLMs.

“[An LLM is] a system for haphazardly stitching together linguistic forms from its vast training data, without any reference to meaning — a stochastic parrot,” Bender said.

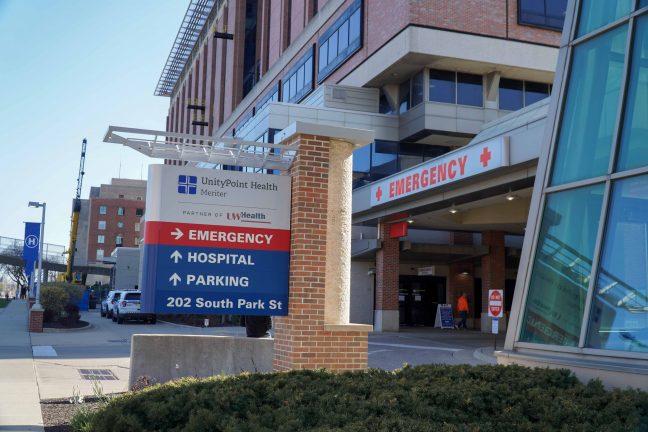

Bender’s paper on LLMs anticipated many of the issues society faces with them today, chief among them exploitative labor practices, biased training data, data theft and a near-complete lack of accountability.

According to Bender, three things must be true to use ChatGPT and other LLMs responsibly: the content isn’t important, no data theft occurs and authorship doesn’t matter.

“There is no ‘who’ with ChatGPT … algorithms are not the sorts of things that can be held accountable,” Bender said.

Possible ethical uses of LLMs include artificial language learning partners, non-player characters in video games and short-form writing support.

To make LLMs safe, society needs to insist on labor rights, regulation, transparency and especially accountability, Bender said. On a personal level, people can be more aware of what they are reading, who wrote it, why it was written and whether it’s accurate.