Every week, The Lab Report will feature a different undergraduate research assistant on campus and their experience in the lab.

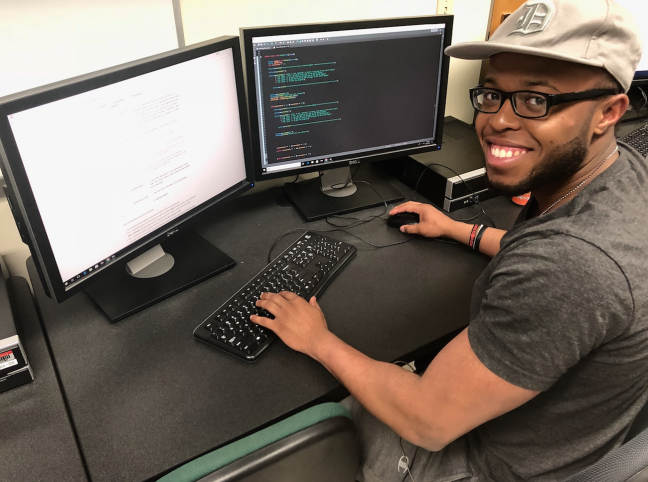

University of Wisconsin senior Brandon Nwadinobi is using his computer science and data analysis abilities to help investigate bias in research and reasons for female, racial and ethnic minority scientists’ underrepresentation in research careers.

Nwadinobi is a senior majoring in molecular biology and studying for a certificate in computer sciences. He is an undergraduate data specialist working with Anna Kaatz, the director of computational sciences at the UW Center for Women’s Health Research.

Kaatz’s current projects involve developing a curriculum to help women and minority scientists advance to leadership, developing data- and text-mining algorithms to test for race and gender bias in the National Institutes of Health’s peer review process, and developing interventions to reduce race and gender bias in medical schools.

Nwadinobi has built web crawlers, text miners and other general web automation programs to make this work easier and more efficient. He’s also helped with a number of Kaatz’s studies. A recent one looked into inconsistencies in grant peer review and how they may affect female, racial and ethnic minority scientists.

“Racism, sexism, these forms of bias are systemic and they even go far into academia,” Nwadinobi said. “A lot of minority and women researchers face severe barriers to progression in their field because they can’t get renewed funding for their research.”

Grant peer review is the primary means by which scientists secure funding for their research programs, Nwadinobi said. The study investigated the NIH — the largest funding agency for biomedical, behavioral and clinical research in the U.S.

As part of this process to allocate money to scientists, collaborative peer review panels of expert scientists evaluate grant applications and assign scores that affect later funding decisions NIH governance makes, according to the study.

Nwadinobi’s data analysis work helped answer three questions:

- Do different panels of reviewers score the same applications similarly?

- How do reviewers establish and maintain common ground in interactions during the meeting?

- Is there a relationship between this grounding process and scoring variability?

Because actual NIH peer review meetings are highly sensitive, this study simulated the process as closely as possible.

Scientists who had experience reviewing for the NIH were recruited to participate, and staff from NIH’s Center for Scientific Review, along with a highly experienced, retired Scientific Review Officer, were consulted on every methodological decision.

The scores individual reviewers assigned independently, before convening for the panel meeting, varied substantially. Collaboration during the peer review meetings reduced this variability within each panel. However, the variability between panels increased during discussion.

Overall, the study found there is little agreement among individual reviewers in how they independently score scientific grant applications. Reviewers belonging to the same panel agree more with one another about an application’s score only after collaborating, and separate panels reviewing the same proposals do not agree with one another.

The study showed that the peer review process is subjective and that its reliability needs to be improved, Nwadinobi said.

“[Researchers’] limits are not being defined by their merits,” Nwadinobi said.

This study serves as a stepping stone for future studies examining how the race/ethnicity and gender of applicants and of reviewers affect the peer review process.

Nwadinobi is interested in how the bias of reviewers affects the variation of these scores and in exploring potential factors influencing the race- and gender-based biases that others have found to occur in NIH peer review.

“Everyone has biases,” Nwadinobi said. “Without our self-awareness, our associations affect our thinking and implicit biases can have serious, real-world effects.”

Nwadinobi is currently working on another project with Kaatz, developing data- and text-mining algorithms that collect information from an NIH database to test for race and gender bias in the NIH’s peer review process.

Diversity in science is important because the current body of work reflects the makeup of the research community, Nwadinobi said. There is a lack of studies investigating issues that affect women and ethnic minorities, he said.

“Research builds upon previous research. For female and minority-focused research, there is a limit to what can be built off of,” Nwadinobi said. “It’s like an exponential curve. As it grows bigger, it grows faster. For female and minority-focused research it’s still at the low end. It hasn’t gotten to the point where it can take off.”