CONTENT WARNING: Discussion of suicide and/or self-harm. If you are experiencing thoughts of suicide or self-harm, dial 988 to reach the Suicide & Crisis Lifeline. View options for mental health services on campus through University Health Services.

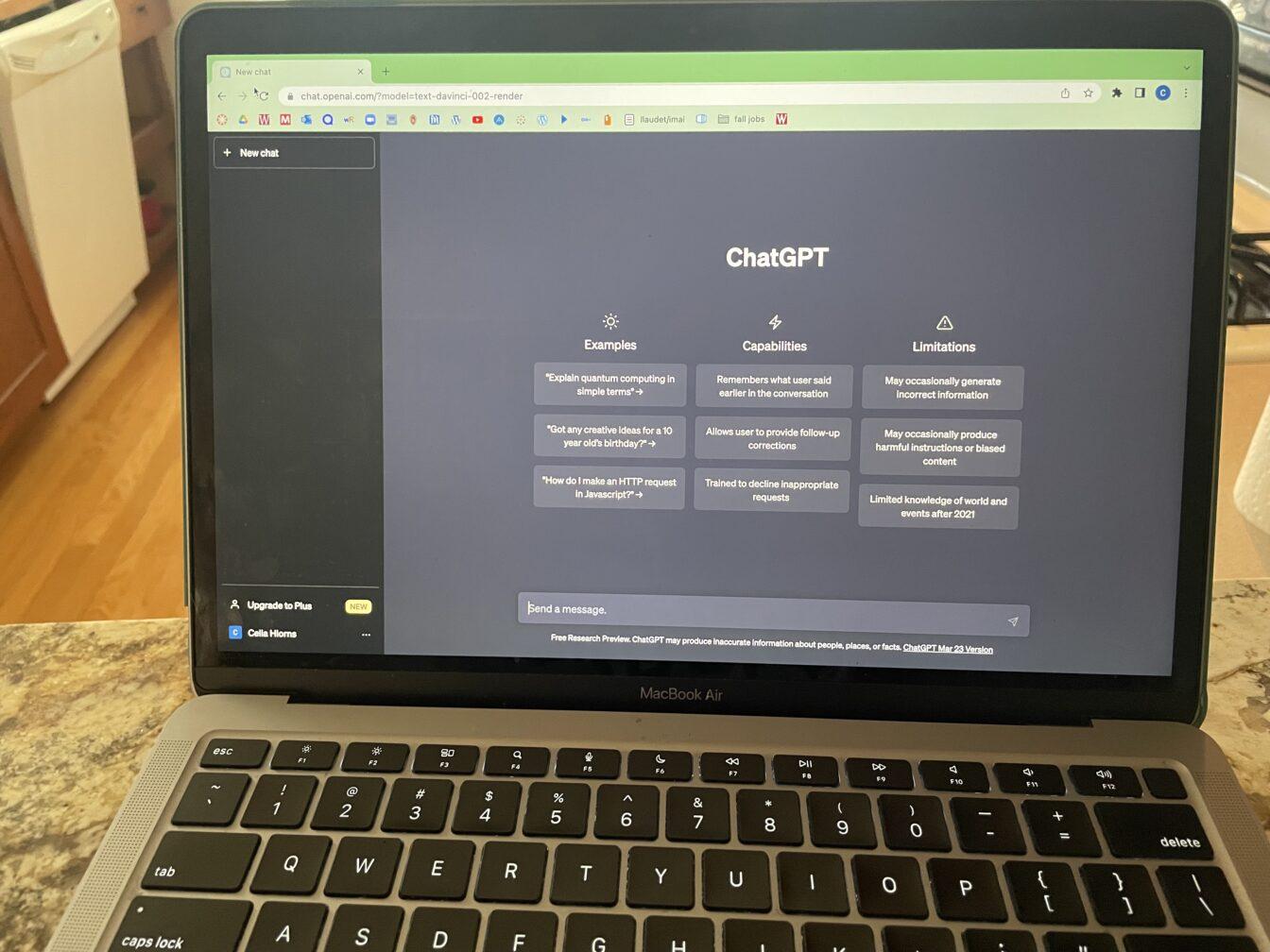

Since its launch in late 2022, ChatGPT has become one of the fastest-growing apps of all time. The new technology has several uses, and recently some have been exploring it as a fast, accessible alternative to therapy. Could ChatGPT serve as a therapy alternative, or are there too many risks in addressing the complexities of mental health?

Point: AI can be an accessible solution to the therapist shortage

In the first two months of its release, ChatGPT gained over 100 million users, compared to the nine months it took for TikTok to cross that same threshold. The app was developed by the company OpenAI as a chatbot that can process and respond to prompts given by users.

Most news surrounding ChatGPT has been with respect to how the technology has been used by students. Northern Illinois University’s research into the capabilities of ChatGPT shows the app can craft essays, write emails and solve relatively complex equations when prompted.

But the app could have uses beyond the classroom. There are now conversations regarding AI’s future role in society, namely in the medical field and mental health. With technology adapting to provide quick and increasingly human-like responses to users’ prompts, many are now wondering if it could be the solution to the world’s shortage of mental health professionals.

There is a clear shortage of therapists in the U.S. and around the world. A map released by the Rural Health Information Hub shows that the majority of counties in the U.S. are facing a mental health professional shortage. Over 150 million Americans live in a federally designated mental health professional shortage area, leaving many without reliable access to help.

This is an even more prominent issue when expanded to the international level. According to the World Health Organization, there has been a 13% increase in mental health issues worldwide over the last decade, with 20% of the world’s child and adolescent population struggling with some form of mental health condition.

AI technology like ChatGPT presents an opportunity to broaden access and definitions of mental health support in an increasingly necessary way.

This is not to say that apps like ChatGPT are prepared to handle every mental health condition or situation. Mental health is a broad and extensive issue that requires a nuanced system of resources for struggling individuals. For some, the ease of access and instantaneous responses that AI offers could be very beneficial if the software were programmed with adequate medical information.

But AI inherently lacks certain things that only a face-to-face connection with a medical professional can provide, such as reading clients’ behavioral or social cues, gathering important life or medical contexts that might go unnoticed by a bot and displaying compassion or empathy in a way that no technological alternative could replicate.

Along these lines, there are some mental health crises that AI technology like ChatGPT should never be in charge of handling. Technological therapy is more often than not a lesser alternative for individuals struggling with mental health, but for those facing an immediate crisis, on-the-ground human judgment might be critical.

But with mental health issues on the rise worldwide and a critical shortage of qualified professionals, the world needs new and innovative solutions to combat the current mental health crisis. AI could be the best way to ease the burden for medical workers by tending to those with concerns that can be addressed quickly and without person-to-person communication.

Fiona Hatch ([email protected]) is a senior studying political science and international studies.

Counterpoint: The risks of using ChatGPT are too great

ChatGPT should be used as a source of therapy very sparingly, if at all, because of the danger it presents to users. After texting with an AI chatbot for a few weeks, a Belgian man died by suicide. Though this is likely not a widespread experience with AI chatbots, it does show the potential dangers of turning to an unreliable AI bot for support.

ChatGPT is not a trained therapist and can give individuals inaccurate information, especially about emotional issues. ChatGPT’s creators designed its policies specifically so that it would not diagnose people with health conditions or provide medical information.

Additionally, ChatGPT is not always accurate in its responses to objective questions. As AI, it cannot truly comprehend the questions people are asking. AI doesn’t always understand the meaning or intent behind a user’s words. So when it comes to complex issues of mental health and therapy, AI can’t be fully accurate or offer the best advice.

Getting therapy over the Internet became incredibly popular during the pandemic. Apps like BetterHelp helped individuals get mental health assistance for a fraction of the cost in the comfort of their own homes. But even BetterHelp had its issues.

Users described the “therapists” who operated on BetterHelp as unprofessional, claiming they gave bad advice that harmed their mental health instead of improving it. Additionally, the app came under fire for sharing customers’ personal data with social media apps, according to the Federal Trade Commission. These issues with BetterHelp, which uses real people to communicate with customers, could also arise with AI chatbots like ChatGPT when they are used for therapy.

There is no promise that ChatGPT can keep users’ information confidential, especially information that could be considered incredibly private. Also, AI chatbots can be less professional than BetterHelp therapists because they aren’t real people. There is no promise that ChatGPT will give people good advice that will actually help them cope with trauma or other mental health issues.

There are certainly areas where ChatGPT could be used in a therapy-like setting. For example, asking a quick question about how to respond to a difficult text or requesting examples of coping mechanisms could be helpful. When a full therapy session is not needed, AI could be used for issues that a person could address later in more detail in a therapy session. Using ChatGPT for continual therapeutic advice, however, could be dangerous.

Therapy is incredibly personal and needs to be well-tailored to an individual to be effective. Therapists and their clients form intense personal relationships, and that relationship simply cannot be mimicked with ChatGPT or even over text with a real human with BetterHelp. Mental health treatment can be incredibly expensive in America, but that treatment is effective.

ChatGPT could have its benefits in certain situations, such as answering a quick question, but it should not be used for long-term, complex mental health support. The AI chatbot is not designed to be used for mental health treatment, so individuals should steer clear of communicating with ChatGPT in that way.

Emily Otten ([email protected]) is a junior majoring in journalism.

Resources regarding suicide prevention and mental health:

- 988 Suicide & Crisis Lifeline: 988 https://988lifeline.org/help-yourself/loss-survivors/

- Crisis Text Line: Text HOME to 741741 https://www.crisistextline.org/

- Survivors of Suicide (SOS) support group: https://www.uhs.wisc.edu/prevention/suicide-prevention/

- Trevor Lifeline: https://www.crisistextline.org/ Crisis intervention and suicide prevention services for lesbian, gay, bisexual, transgender, queer and questioning (LGBTQ) young people under 25

- 24/7 crisis support 608-265-5600 (option 9)