The University of Wisconsin Department of Computer Sciences announced that the National Science Foundation (NSF) has awarded UW’s Center for High Throughput Computing (CHTC) a $2.2 million grant for its participation in an effort to upgrade global computing infrastructure.

According to a press release from the UW Department of Computer Sciences, the grant will go directly to work on the Large Hadron Collider by a team of several full-time CHTC staff who spend all or most of their time working on the Open Science Grid (OSG), a global distributor of computing for scientific research.

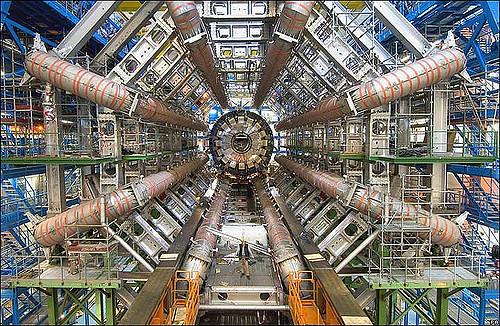

The grant is part of a larger $25 million NSF award for the Institute for Research and Innovation in Software for High Energy Physics (IRIS-HEP). The award will be distributed among 17 institutions, including other branches of the OSG. The computing upgrade will make it possible to process more efficiently the increased amount of data that will come from the Large Hadron Collider (LHC) in Geneva, Switzerland, once the collider has undergone an upgrade of its own, slated for 2026, according to the press release.

The Large Hadron Collider, soon to be the High-Luminosity Large Hadron Collider (HL-LHC), has been connected to UW since before the machine started operations in 2008. Tim Cartwright, a UW computer sciences staff member and chief of staff for the OSG, shed light on the depth of this connection.

“Our physics department has been involved from the beginning,” Cartwright said. “We have the CHTC which is the birthplace of High Throughput Computing … For a long time, the high energy physics community was the real driver of need for massive scale computing. The software has grown to meet the challenges.”

The CHTC, established at Madison in 1988, has about 20 full-time academic staff, most of whom work in software development.

Much of the CHTC’s work involves collaborating with research groups that use the organization’s computing infrastructure. Hundreds of research groups per year use the CHTC’s resources, which are built for High Throughput Computing, an approach similar to but distinct from High Performance Computing (HPC).

“High Performance Computing focuses on numbers of computing operations that can be performed in a second,” said Cartwright. “With High Throughput Computing, we care more about how much computing you can get done in a year.”

High Throughput Computing is a way to handle large-scale computing that doesn’t need the tightly interconnected machines HPC relies on to run, for example, weather simulations. It handles workloads in which the individual parts are largely independent of each other, which fits the LHC’s needs well.
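To make the idea of largely independent parts concrete, here is a minimal sketch in Python. It is an illustration only, not CHTC or OSG software: the function names `analyze_chunk` and `run_independent_jobs` are hypothetical, and a local thread pool stands in for what would really be many separate machines.

```python
from concurrent.futures import ThreadPoolExecutor


def analyze_chunk(chunk_id: int) -> int:
    """Hypothetical stand-in for one independent job, such as one data file."""
    # Each job depends only on its own input, never on another job's result.
    return sum((chunk_id * 31 + i) % 7 for i in range(1000))


def run_independent_jobs(chunk_ids, workers=4):
    """Run every job separately; no job waits on or talks to another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in the same order as the inputs.
        return list(pool.map(analyze_chunk, chunk_ids))


chunk_ids = list(range(20))
parallel = run_independent_jobs(chunk_ids)
sequential = [analyze_chunk(c) for c in chunk_ids]
print(parallel == sequential)
```

Because no job needs another job's output, the work can be split across any number of workers, in any order, and still give the same answers as running the jobs one at a time. A tightly coupled simulation, where each step needs its neighbors' results, cannot be split this freely.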

Tulika Bose, a UW physics professor and researcher at the LHC, said the upgrade from LHC to HL-LHC will mean processing far more data.

“Currently [within the LHC], collisions between bunches of protons happen every 25 nanoseconds … that’s [40] million times a second,” Bose said. “It is humanly impossible to write out data at this rate.”

Bose and colleagues have designed a filtering system called a “trigger” that evaluates information coming from the LHC and decides whether it is important enough to be saved for future investigation.

The trigger must narrow the 40 million bunch collisions that happen every second down to only about 1,000 that are saved. Events that are not recorded can never be recovered, according to Bose.

“Events you have thrown away are gone for good, so the selection processes within the trigger are really, really critical,” Bose said. “They define what kind of physics you can do.”
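The scale of that selection can be worked out from the figures quoted here. The sketch below, in Python, takes the 25-nanosecond spacing and the 1,000 saved events per second from the article; everything else is an assumption for illustration. In particular, `toy_trigger`, the single made-up `energy` value per event and the threshold of 8.0 are hypothetical and are not how the real LHC trigger decides.

```python
import random

BUNCH_SPACING_NS = 25      # spacing between bunch collisions, from the article
SAVED_PER_SECOND = 1_000   # events the trigger keeps per second, from the article

# One second is 1,000,000,000 nanoseconds.
crossings_per_second = 1_000_000_000 // BUNCH_SPACING_NS
rejection_factor = crossings_per_second // SAVED_PER_SECOND


def toy_trigger(events, threshold):
    """Keep only events whose (made-up) energy exceeds the threshold."""
    return [e for e in events if e["energy"] > threshold]


# Simulated events with random energies; a fixed seed keeps runs repeatable.
rng = random.Random(0)
events = [{"id": i, "energy": rng.expovariate(1.0)} for i in range(100_000)]
kept = toy_trigger(events, threshold=8.0)

print(f"{crossings_per_second:,} crossings per second")
print(f"about 1 in {rejection_factor:,} can be saved")
print(f"toy trigger kept {len(kept)} of {len(events):,} simulated events")
```

A spacing of 25 nanoseconds works out to 40 million crossings per second, so keeping 1,000 of them means saving roughly one in 40,000. Anything the filter rejects is simply not in the output list, which mirrors Bose's point that discarded events are gone for good.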

With the hardware upgrade, the number of events will only increase. Cartwright said projected computing and storage needs for the upgrade will be roughly 10 times what is available today. Part of the goal is to shrink the currently predicted shortfall in computing resources by using innovative algorithms that get more processing done with less computing effort.

The OSG software team is focused mainly on bringing together pieces of software contributed by other institutions involved with the IRIS-HEP project to form what Cartwright calls the “fabric of production services.”

“The OSG runs a lot of the central services that are used by all of the institutions,” Cartwright said. “The software, coming from all over the globe, will need to be integrated very tightly and guided into its production state.”

The Higgs boson, predicted by theory decades earlier, was discovered at the LHC in 2012. Bose said there is still more to learn and understand by measuring its properties.

Additionally, Bose hopes to learn more about dark matter and dark energy with the HL-LHC, and she hasn’t ruled out the discovery of other particles like the graviton, an as-yet-undiscovered elementary particle that is linked to gravity in the way that the Higgs boson is linked to mass.

“Maybe to access some of these new particles, we need to take a step up,” Bose said. “We need more luminosity, more collisions, and maybe in those conditions, we can discover something new.”